An exclusive article by Fred Kahn

Artificial intelligence is reshaping how financial institutions detect and prevent illicit financial flows. Advanced models are increasingly deployed to monitor suspicious transactions, yet their complexity brings an uncomfortable paradox. Highly accurate systems often operate as black boxes that are difficult to explain to regulators or auditors, while more transparent glass box approaches sometimes trade performance for interpretability. This tension is not just a technological debate; it is a fundamental issue for anti-money laundering frameworks and financial crime compliance. When billions of dollars are laundered each year through the financial system, the ability to explain and defend monitoring decisions becomes a central pillar of effective supervision.

The global regulatory landscape is evolving rapidly to address this challenge. Authorities require institutions to demonstrate not only that they can detect suspicious activity but also that they can explain how their systems arrived at those conclusions. This article explores the risks and realities of black box versus glass box approaches through the lens of money laundering detection. It assesses their strengths, weaknesses, and regulatory implications, then closes with reflections on where compliance functions should steer their efforts.


Money laundering detection and the black box dilemma

Money laundering remains one of the most significant threats to the integrity of financial markets. It enables organized crime, corruption, tax evasion, and terrorist financing. Traditional monitoring systems relied heavily on rule-based logic, with if-then scenarios designed to capture anomalies such as high-value transfers, rapid movement of funds, or unusual customer behavior. These methods produced predictable outputs that were easy to explain to supervisors, but they often lacked the sophistication to uncover complex layering or cross-border structuring.
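To make the if-then logic concrete, here is a minimal sketch of a rule-based check in Python. The thresholds, field names, and the `evaluate_rules` helper are hypothetical choices made for illustration; they are not taken from any regulator or vendor system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Transaction:
    account: str
    amount: float
    timestamp: datetime


# Hypothetical parameters, chosen only for illustration.
HIGH_VALUE_THRESHOLD = 10_000.0
RAPID_WINDOW = timedelta(hours=24)
RAPID_COUNT = 3


def evaluate_rules(tx, history):
    """Return the human-readable reasons for every rule that fired."""
    reasons = []

    # Rule 1: high-value transfer.
    if tx.amount >= HIGH_VALUE_THRESHOLD:
        reasons.append(
            f"high-value transfer: {tx.amount:.2f} >= {HIGH_VALUE_THRESHOLD:.2f}"
        )

    # Rule 2: rapid movement of funds on the same account.
    recent = [
        h for h in history
        if h.account == tx.account
        and timedelta(0) <= tx.timestamp - h.timestamp <= RAPID_WINDOW
    ]
    count = len(recent) + 1  # include the transaction being evaluated
    if count >= RAPID_COUNT:
        reasons.append(f"rapid movement: {count} transactions within 24 hours")

    return reasons
```

Every alert carries the exact rule that produced it, which is why such output is easy to defend in front of a supervisor. It is also why a launderer who stays just under each threshold passes unnoticed.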

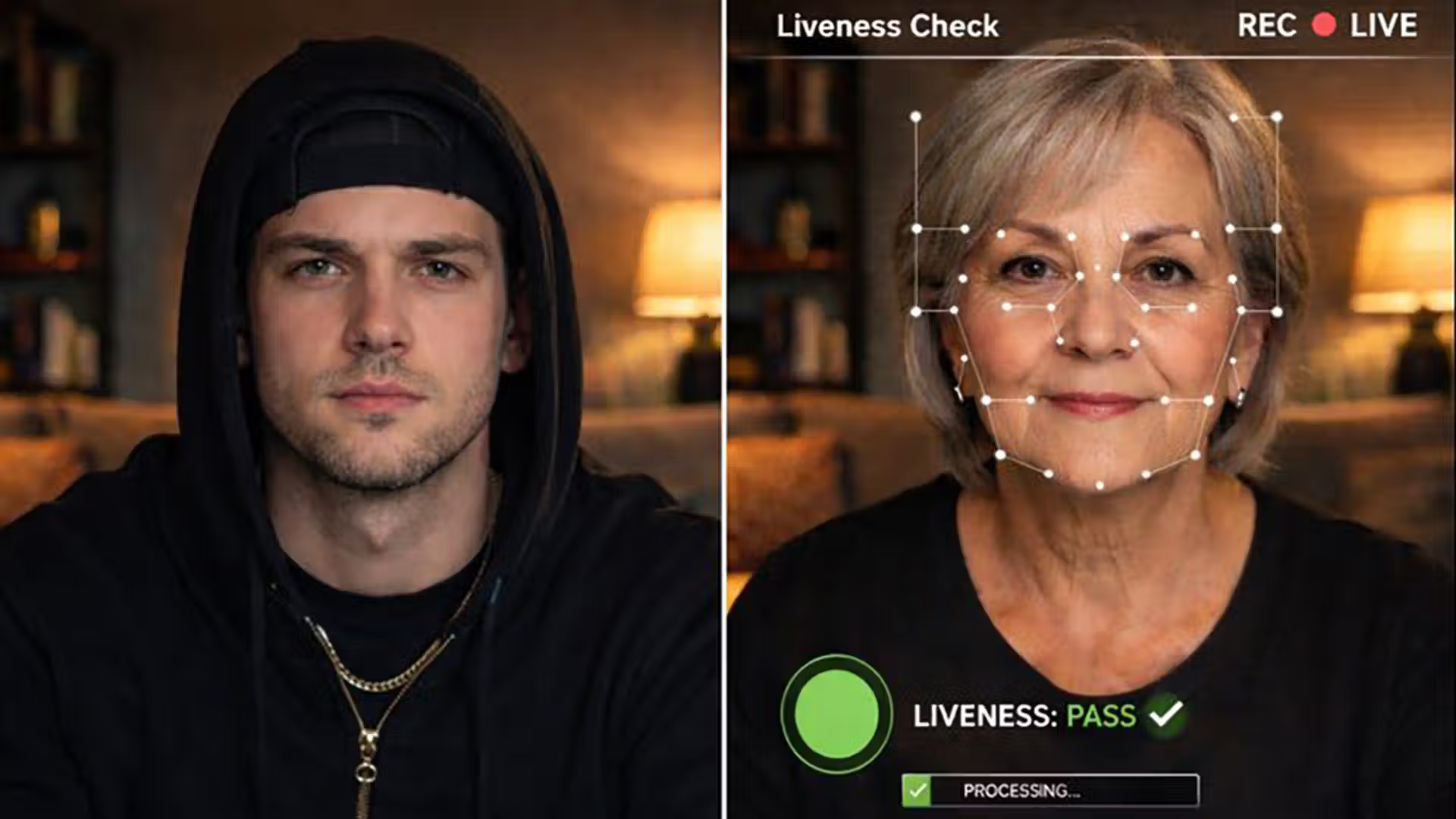

Enter the black box. Deep learning models, neural networks, and other complex algorithms promise far higher accuracy. They can surface hidden correlations across massive data sets that human analysts or static rules would be unlikely to detect. For example, a neural network can process years of transactional history, customer profiles, and external risk indicators to identify laundering patterns that mimic legitimate trade or investment flows.

However, the issue lies in transparency. When such a system alerts on a transaction, compliance officers may struggle to articulate why. The internal weights and non-linear transformations of deep learning are notoriously difficult to explain. Regulators are unlikely to accept “the machine decided” as an explanation when billions in client assets are at stake. This is the core dilemma: accuracy versus explainability.

The risk is not theoretical. Institutions relying heavily on black box models face the possibility that their systems flag activity without a clear rationale, leading either to over-reporting, which clogs investigative pipelines, or to under-reporting, which creates compliance breaches. A lack of interpretability undermines the credibility of suspicious activity reports and weakens the chain of accountability.

Glass box models and explainable monitoring

Glass box systems, by contrast, aim to provide interpretability. These approaches rely on models whose decision-making process is accessible and understandable. Decision trees and hybrid models combining rules and statistics are interpretable by construction, while post hoc explanation tools such as SHAP or LIME offer a further pathway to explainability. Both allow investigators to see which features of a transaction contributed to a particular risk score.
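As a rough illustration of what "seeing which features contributed" can mean, the sketch below scores a transaction with a simple additive (logistic) model, where each feature's contribution is just its weight times its value. The feature names, weights, and bias are invented for the example, and this is a plain linear model rather than SHAP or LIME themselves.

```python
import math

# Hypothetical weights for an additive risk model (illustrative only).
WEIGHTS = {
    "amount_zscore": 0.8,      # how unusual the amount is for this customer
    "high_risk_country": 1.5,  # 1.0 if the counterparty country is high risk
    "new_beneficiary": 0.6,    # 1.0 if the beneficiary was never paid before
}
BIAS = -3.0


def explain_score(features):
    """Return (risk score in [0, 1], contributions ranked by magnitude)."""
    contributions = {
        name: WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS
    }
    logit = BIAS + sum(contributions.values())
    score = 1.0 / (1.0 + math.exp(-logit))
    ranked = sorted(
        contributions.items(), key=lambda kv: abs(kv[1]), reverse=True
    )
    return score, ranked


score, ranked = explain_score(
    {"amount_zscore": 2.0, "high_risk_country": 1.0, "new_beneficiary": 1.0}
)
for name, value in ranked:
    print(f"{name}: {value:+.2f}")
print(f"risk score: {score:.2f}")
```

An investigator reading this output can state which factor weighed most heavily on the score. That kind of statement is much harder to extract from the internal weights of a deep network.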

The trade-off is performance. Glass box models often cannot achieve the same detection rates as deep learning. Complex laundering techniques such as mirror trades, nested offshore structures, or the use of digital assets may slip through. Yet regulators consistently emphasize the importance of transparency over pure performance. Financial institutions must be able to demonstrate the logic of their monitoring systems, especially during audits or enforcement proceedings.

Glass box monitoring aligns with the principle of accountability embedded in financial crime legislation. Supervisory authorities require a clear trail of evidence showing how alerts are generated, assessed, and escalated. Institutions that cannot provide such evidence risk penalties, reputational damage, or loss of license.

This is where hybrid strategies emerge. Some institutions combine machine learning with rule-based overlays, ensuring that high-risk scenarios remain governed by explainable parameters while advanced analytics refine the detection of subtle patterns. Others deploy explainability layers that sit atop black box models, translating outputs into human-readable justifications. These methods attempt to balance accuracy with transparency, though challenges remain.
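One way to picture a rule-based overlay is as a decision function in which explainable rules are evaluated first and the model score is consulted only when no rule fires. The sketch below assumes a hypothetical transaction dictionary, a hypothetical `model_score` callable standing in for any opaque model, and illustrative thresholds; it is not a description of any specific institution's design.

```python
from typing import Callable, NamedTuple


class Decision(NamedTuple):
    alert: bool
    source: str   # "rule", "model", or "none"
    reason: str


def hybrid_decision(
    tx: dict,
    model_score: Callable[[dict], float],
    score_threshold: float = 0.9,  # hypothetical cut-off
) -> Decision:
    # Explainable overlay: high-risk scenarios are governed by fixed rules.
    if tx.get("sanctioned_counterparty", False):
        return Decision(True, "rule", "counterparty flagged as sanctioned")
    if tx.get("amount", 0.0) >= 10_000:
        return Decision(True, "rule", "amount at or above 10,000")

    # Advanced analytics refine detection where no rule applies.
    score = model_score(tx)
    if score >= score_threshold:
        return Decision(
            True, "model",
            f"model score {score:.2f} >= {score_threshold:.2f}",
        )
    return Decision(False, "none", "no rule fired; model score below threshold")


# Usage with a stand-in model that always returns 0.95.
print(hybrid_decision({"amount": 2_500.0}, lambda tx: 0.95))
```

Recording the `source` of each alert keeps the audit trail honest: rule-driven alerts are self-explanatory, while model-driven alerts are clearly marked as needing an additional explanation layer.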

Compliance risks when transparency fails

The implications of non-explainable monitoring go far beyond technical inconvenience. Failure to demonstrate clear monitoring logic can be interpreted as failure to maintain an effective anti-money laundering program. This exposes institutions to significant compliance risk.

Regulators expect institutions to uphold principles of proportionality, accountability, and customer fairness. A black box model that cannot explain why certain customers are flagged risks accusations of bias or arbitrary treatment. Moreover, when false positives overwhelm compliance teams, resources are drained from pursuing genuine threats. Conversely, if false negatives slip through due to hidden biases, laundered funds may continue moving unchecked.
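The cost of false positives and false negatives can be expressed with two standard measures, precision and recall. The short sketch below uses invented counts purely to show the arithmetic; the `alert_quality` helper is hypothetical.

```python
def alert_quality(true_pos: int, false_pos: int, false_neg: int):
    """Return (precision, recall) for a batch of reviewed alerts."""
    flagged = true_pos + false_pos
    actual = true_pos + false_neg
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall


# Invented example: 100 alerts, 5 genuine, and 5 genuine cases missed.
precision, recall = alert_quality(true_pos=5, false_pos=95, false_neg=5)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

In this made-up case, investigators would spend 95 of every 100 reviews on legitimate activity while half of the genuine cases go undetected, which is the double exposure the paragraph above describes.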

Recent enforcement trends show that supervisors increasingly scrutinize the governance of technology in AML programs. Policies, model risk management frameworks, and independent validation processes are no longer optional. Financial institutions must show how they test, calibrate, and explain their transaction monitoring systems. A model that cannot be audited becomes a liability.

Additionally, cross-border financial institutions face the added burden of multiple regulatory regimes. A black box model that passes muster with one authority may fail under the stricter interpretability standards of another. Harmonizing monitoring practices across jurisdictions therefore requires careful design. Compliance leaders must weigh not only effectiveness but also regulatory expectations in every market where they operate.

Shaping the path forward

The trade-off between black box and glass box approaches will not disappear. Money laundering methods continue to evolve, from trade-based schemes to crypto-asset layering. Monitoring systems must adapt quickly, often requiring the raw power of advanced analytics. Yet the legal and ethical demands of transparency cannot be ignored.

Financial institutions should pursue three key strategies. First, embed explainability by design. Rather than bolting on interpretability after the fact, monitoring systems should be architected with transparency as a core principle. Second, establish robust governance frameworks. Independent testing, periodic validation, and clear escalation procedures build the evidence regulators require. Third, invest in hybrid models. By combining high-performing algorithms with explainability overlays and rule-based controls, institutions can strike a workable balance.

Ultimately, the ability to fight money laundering depends not only on detection but also on accountability. A system that cannot be explained erodes trust with regulators, clients, and society. By embracing transparency while leveraging technological innovation, financial institutions can strengthen their AML programs against increasingly complex criminal networks.

