Artificial intelligence is reshaping financial services, and with that transformation has come a surge in digital financial crime. Canada’s financial intelligence agency, FINTRAC, and the Global Risk Institute (GRI) jointly hosted the second Financial Industry Forum on Artificial Intelligence (FIFAI II) in late 2025 to examine how AI is influencing fraud, sanctions evasion, insider threats, and money laundering. The discussions revealed both new opportunities and critical weaknesses in the way financial institutions, regulators, and policymakers approach risk management.

The rise of AI and financial crime

While AI is now embedded across transaction monitoring, sanctions screening, and behavioral analytics, its misuse by criminals is accelerating faster than most institutions can adapt. Sophisticated deepfakes, synthetic identities, and automated fraud-as-a-service tools are eroding traditional controls. FIFAI II participants agreed that without coordinated investment, shared intelligence, and harmonized regulation, financial institutions risk falling permanently behind threat actors who exploit AI for profit and concealment.

Canada’s challenge mirrors global AML pressures: constrained budgets, legacy systems, and uneven AI literacy. Yet the forum’s outcome points toward an evolving model of collaboration—one that unites financial institutions, regulators, and government under a shared responsibility framework.

AI adoption and the evolving AML risk landscape

Financial crime has evolved into a data war, and AI sits at its center. Participants in the forum stressed that while AI can enhance detection and prevention, its deployment across Canada’s financial sector remains fragmented. About half of the experts surveyed identified cost and resource limitations as the main barrier to implementing AI solutions.

The first major risk highlighted was constrained investment. Many institutions still treat AI as a cost-cutting mechanism rather than a strategic deterrence tool. True resilience requires prioritizing investments that improve effectiveness before efficiency. When firms deploy AI to follow a trend rather than to serve a defined purpose, they risk diluting their controls and producing misleading outputs. The consensus among experts was clear: measurable impact and return on risk mitigation, not hype, must drive AI strategy.

Another persistent constraint involves legacy infrastructure. A significant number of Canadian financial institutions operate on outdated platforms incompatible with modern AI systems. Modernization, though expensive, is no longer optional. Threat actors exploit these vulnerabilities, using the slower adoption cycle to infiltrate systems and compromise data.

The shortage of AI expertise compounds the issue. Smaller institutions face not only financial limitations but also limited access to qualified data scientists and compliance technologists. This imbalance increases systemic risk by turning smaller firms into entry points for cybercriminals. Participants emphasized that AI adoption cannot succeed without building literacy across all levels—from compliance analysts to board members.

Government regulators face similar constraints. Resource scarcity within agencies slows AI integration into public-sector supervision and enforcement. Without comparable technological agility, authorities risk being outpaced by both the private sector and criminal organizations.

New vulnerabilities in the AI era

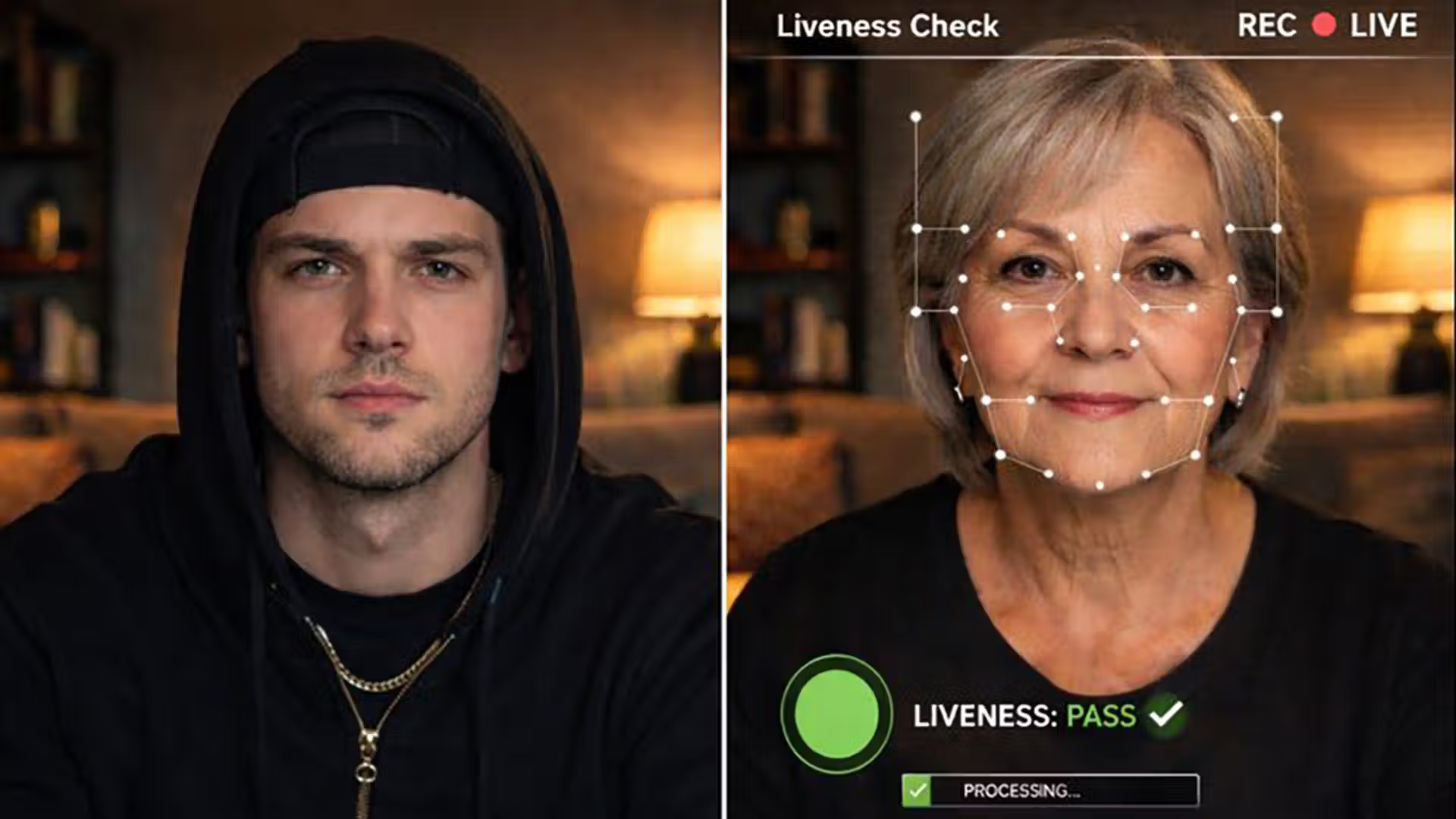

Beyond the question of resources, FIFAI II exposed an alarming trend in the sophistication of AI-enabled financial crime. The convergence of social engineering, synthetic identities, and automated scripts has made fraud more personal, scalable, and resilient.

AI-fueled cyberattacks now exploit personalization, often combining public social data with real-time behavioral prediction. Victims receive highly convincing communications tailored by algorithms, and many fall for schemes that mimic their banking history or tone of voice. This manipulation erodes public confidence in legitimate institutions and raises compliance costs dramatically.

Cross-border vulnerabilities are equally concerning. Criminal networks can now deploy AI models from foreign jurisdictions, masking beneficial ownership, laundering illicit funds through shell structures, or simulating legitimate transaction patterns. Detection remains difficult when datasets are incomplete or fragmented across borders. Limited information exchange between regulators in different provinces and countries amplifies the problem.

Insider threats have also evolved. As institutions integrate AI into internal systems, the risk that employees or contractors exploit privileged access has increased. Some insiders deliberately manipulate datasets or train algorithms with corrupted inputs, undermining model integrity. Others extract or sell sensitive data to external threat actors.

Trust itself has become a casualty. As deepfakes and synthetic communications proliferate, even legitimate internal communications or customer interactions can appear suspect. The financial sector’s dependency on digital authentication creates a fragile environment where both employees and clients must continually question what they see.

Low barriers, high damage

The democratization of AI technologies has effectively leveled the playing field for criminals. Previously, large-scale fraud required technical expertise or organized infrastructure. Now, small groups—or even individuals—can leverage publicly available AI tools to commit complex crimes. The rise of fraud-as-a-service exemplifies this shift: ready-made AI kits sold on dark web markets allow users to automate phishing campaigns, identity fabrication, and fund transfers with minimal technical knowledge.

The financial imbalance between attackers and defenders is stark. It costs threat actors little to deploy an AI-powered scheme, yet institutions must invest heavily to prevent or trace it. This asymmetry encourages criminal innovation and increases the frequency of attacks. AI’s ability to remove language barriers further enhances cross-border crime, enabling actors to operate seamlessly across regions without linguistic constraints.

Experts at the forum underscored that while criminals exploit AI’s low entry costs, financial institutions face complex regulatory hurdles. The Canadian AML framework, divided among federal and provincial jurisdictions, lacks unified oversight. No single authority currently covers detection, reporting, prosecution, and conviction end to end. The absence of harmonized enforcement limits the country’s capacity to respond swiftly to emerging threats.

A federal beneficial ownership registry, launched in January 2024, was cited as progress but not a complete answer. Gaps at the provincial level still allow shell entities to remain hidden. Participants urged deeper intergovernmental alignment to ensure transparent ownership information and facilitate coordinated enforcement.

Data, governance, and systemic weaknesses

AI’s effectiveness depends entirely on data quality. Yet Canada’s financial institutions still struggle with inconsistent data standards, fragmented systems, and incomplete records. Models trained on poor-quality data can reinforce bias or miss critical red flags, especially in cross-institutional monitoring.

Legacy systems exacerbate this issue. Institutions attempting to retrofit AI tools onto outdated infrastructure face compatibility problems that extend implementation timelines. Criminals exploit this lag, targeting systems mid-transition when controls are inconsistent.

Data governance emerged as another major vulnerability. The absence of unified frameworks for ownership, access, and accountability increases the likelihood of insider manipulation. Some financial institutions mistakenly relax traditional controls when adopting AI, assuming the technology itself provides inherent security. The forum cautioned against this misconception, noting that human oversight remains indispensable.

Robust governance frameworks are the foundation of AML resilience. Boards must require clear documentation of AI systems, transparent decision-making logs, and auditable model behavior. Without these safeguards, institutions risk losing regulatory confidence and public trust simultaneously.
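One way to make decision logs auditable is to chain entries by hash, so that any later edit to a past record becomes detectable. The sketch below is illustrative only and was not described at the forum; the function names and the record fields (`model`, `alert_id`, `decision`) are hypothetical, and a production system would also need access controls and secure storage.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash, record):
    # Serialize with sorted keys so the same record always hashes the same way.
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log, record):
    """Append a decision record, linking it to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "record": record,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, record),
    })


def verify_log(log):
    """Return True only if every entry still matches its stored hash chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical example records for an AI-assisted alert review.
decision_log = []
append_entry(decision_log, {"model": "tm-v1", "alert_id": 101, "decision": "escalate"})
append_entry(decision_log, {"model": "tm-v1", "alert_id": 102, "decision": "close"})
```

Calling `verify_log(decision_log)` after the two appends confirms the chain is intact; if someone later changes a stored `decision` value, the recomputed hash no longer matches and verification fails.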

The forum’s participants also noted that even advanced AI models cannot compensate for inadequate data integrity. Institutions must invest continuously in cleansing, consolidating, and standardizing datasets. The ability to trace the origin, structure, and transformation of financial data is essential to validate AI outputs and ensure regulatory compliance.

Strengthening defences through collaboration

The most promising outcomes of FIFAI II were the recommendations on best practices and structural reforms. The path forward requires simultaneous modernization, collaboration, and accountability across both private and public sectors.

Effective governance remains the cornerstone. Institutions must continuously update control frameworks to integrate AI-specific oversight, ensuring that existing compliance systems adapt to new risks. Governance should cover not only technology but also ethics, privacy, and explainability—so that decision-makers fully understand how algorithms influence AML outcomes.

Data quality and accessibility are equally critical. Expanding and interlinking corporate registration databases would strengthen beneficial ownership identification. Real-time data integration across provinces and between public and private entities could substantially reduce blind spots exploited by criminals.

Investment in AI tools must focus on tangible risk reduction. Pattern recognition, behavioral analytics, and crypto transaction monitoring are key areas where AI can detect complex money-laundering typologies. However, experts stressed that financial institutions should not overemphasize cost efficiency at the expense of effectiveness.

Education and training form the human foundation of resilience. Upskilling employees, regulators, and even customers in AI literacy enhances the collective capacity to recognize and mitigate risk. Boards and senior management must also acquire enough technical understanding to challenge or approve AI-driven decisions responsibly.

Scaling AI deployment should follow an incremental approach that moves through pilot, operational, and strategic phases. This gradual model allows institutions to test systems safely, evaluate ROI, and prevent large-scale vulnerabilities during transition.

Strategic opportunities emerging from FIFAI II

The forum’s final deliberations pointed toward a national transformation. Canada is moving to institutionalize the fight against AI-driven financial crime through both technological modernization and structural reform.

Modernizing infrastructure is the first step. By updating legacy systems, standardizing data formats, and consolidating sources, financial institutions can enable AI systems to operate in real time. Real-time risk assessment during onboarding or transaction monitoring would drastically improve detection speed and accuracy. Automated suspicious transaction reporting, powered by AI pattern recognition, would relieve analysts of manual work and make report quality more consistent.

The second opportunity lies in organizational transformation. Institutions must shift from fragmented, short-term AI projects to integrated, strategic deployments. Establishing cross-functional teams combining compliance, data science, and cybersecurity ensures that financial crime prevention is treated as an enterprise priority rather than a side experiment.

For government agencies, collaboration across pillars—finance, justice, and technology—is imperative. Shortly after the workshop, Canada announced the creation of a National Anti-Fraud Strategy and a dedicated Financial Crimes Agency. This centralization aims to coordinate intelligence and streamline enforcement, directly addressing the coordination gaps highlighted by the forum.

Regulatory innovation was another priority. The forum strongly endorsed the creation of AI sandboxes—controlled environments where institutions can test new algorithms without fear of punitive consequences. This model, already successful in cybersecurity, would allow experimentation while maintaining supervision and ethical standards.

Participants also called for reforming ownership definitions. Reducing the 25% beneficial ownership threshold and expanding it to cover control and influence would enhance transparency across complex corporate structures. Such a change would align Canada’s standards more closely with those adopted in Europe and improve cross-border enforcement.
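To see why the threshold matters, consider how stakes held through layered holding companies multiply into an effective stake. The following is a minimal sketch, not a method described at the forum: the entity names, the percentages, and the 10% alternative threshold are all hypothetical, and it covers ownership only, not the control and influence criteria participants also proposed.

```python
from collections import defaultdict


def effective_ownership(owners_of, target):
    """Sum each individual's direct and indirect stake in `target`.

    `owners_of` maps an entity to {owner: fraction held}. Any owner that is
    not itself a key in `owners_of` is treated as a natural person.
    Assumes the ownership structure contains no circular holdings.
    """
    totals = defaultdict(float)

    def walk(entity, fraction):
        for owner, share in owners_of.get(entity, {}).items():
            stake = fraction * share
            if owner in owners_of:
                walk(owner, stake)  # owner is a company: keep climbing
            else:
                totals[owner] += stake  # owner is an individual

    walk(target, 1.0)
    return dict(totals)


def owners_at_or_above(stakes, threshold):
    """Return the individuals whose effective stake meets the threshold."""
    return sorted(name for name, stake in stakes.items() if stake >= threshold)


# Hypothetical structure: OpCo is held partly through two holding companies.
owners_of = {
    "OpCo": {"HoldCo A": 0.60, "Alice": 0.10, "HoldCo B": 0.30},
    "HoldCo A": {"Alice": 0.20, "Bob": 0.80},
    "HoldCo B": {"Carol": 1.00},
}

stakes = effective_ownership(owners_of, "OpCo")
flagged_at_25 = owners_at_or_above(stakes, 0.25)  # current 25% threshold
flagged_at_10 = owners_at_or_above(stakes, 0.10)  # hypothetical lower threshold
```

With these made-up figures, Alice holds 10% directly plus 20% of HoldCo A's 60%, for an effective 22%. She falls below the 25% line but would be captured by the hypothetical 10% one, which is the kind of gap a lower threshold is meant to close.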

Privacy laws were acknowledged as a double-edged sword. While protecting citizens’ rights, strict privacy regimes can restrict the timely exchange of critical data between institutions and regulators. A more balanced framework may be required to enable rapid collaboration while maintaining fundamental privacy protections.

Building the three-pillar model

The workshop concluded that lasting success depends on a collaborative regime built on three pillars: industry, regulators, and law enforcement. Joint intelligence sharing emerged as the most powerful instrument against AI-enabled financial crime. Sixty percent of participants supported the creation of structured channels for continuous information exchange.

This model envisions near-real-time communication between compliance teams, national regulators, and investigative agencies. Shared data on typologies, suspicious patterns, and network behavior would allow all participants to act faster and reduce duplication. Collaboration must also extend internationally, as financial crime networks are rarely confined to one jurisdiction.

The upcoming creation of Canada’s Financial Crimes Agency represents a tangible move toward this model. By centralizing expertise, consolidating data, and coordinating cross-pillar responses, the agency could become the focal point of Canada’s AML and AI governance ecosystem.

Canada’s next frontier in AML modernization

The discussions at FIFAI II illustrated that the battle against financial crime in the AI era is not just technical—it is systemic. Without structural reform, data standardization, and cross-sector cooperation, AI’s potential will remain underutilized while criminals continue to exploit it.

Canada’s decision to establish a National Anti-Fraud Strategy and a Financial Crimes Agency shows recognition of the need for central leadership. These initiatives, combined with continued investment in AI literacy, ethical frameworks, and sandbox testing, could position the country as a model for responsible AI in AML compliance.

The forum closed with an acknowledgment that no single entity can combat AI-enabled financial crime alone. The pace of technological evolution demands joint investment, transparency, and accountability across public and private sectors. Continued engagement between FINTRAC, GRI, and the financial industry will be essential to maintain momentum.

Ultimately, Canada’s ability to secure its financial system will depend on how effectively it integrates AI innovation with governance discipline. With every advancement in technology comes a new layer of risk—but also the opportunity to outpace those who exploit it.



Source: The Global Risk Institute
