An exclusive article by Fred Kahn

Many financial institutions are unsure whether their vendors rely on genuine intelligence or repackaged rule libraries dressed up as advanced innovation. The challenge has become more pressing as regulators highlight persistent gaps in automated monitoring quality across banks of all sizes. Vendors frequently promote solutions framed as transformative when the underlying logic aligns with approaches that have existed for decades. Compliance teams face pressure from boards and supervisors to demonstrate meaningful upgrades to detection capability. This environment leads to uncertainty around what technology delivers measurable benefits and what simply repeats traditional monitoring techniques with new branding.


AI evaluation

The rapid expansion of automated monitoring tools across global finance has created a crowded market where many providers claim to deliver breakthrough intelligence. Institutions reviewing these platforms often find that the promised sophistication masks familiar rule sets that flag static thresholds, repeated behaviours, or fixed patterns. Marketing language often presents these functions as adaptive even when the software responds in the same way regardless of context or historic performance. The result is an inflated perception of capability that does not match practical outcomes.

Regulators have long recognised that rule-based systems remain valuable for well-understood typologies. Many money laundering cases still feature predictable events such as sudden activity spikes or cash-based structuring, and rules can efficiently identify these situations. However, many vendors apply cosmetic labels suggesting that the software evaluates patterns through learning when the tool simply follows predefined sequences. Institutions that assume they are acquiring adaptive capability may therefore fail to calibrate expectations, leading to persistent false-positive saturation or gaps in nuanced detection.

Financial supervisors have repeatedly encouraged banks to understand the functional foundations of automated tools. Documentation from international standard setters notes that institutions remain accountable for understanding how their systems produce alerts. When the underlying method is built on rigid logic, the bank must avoid presenting the system as flexible or autonomous. Failing to understand the architecture can result in control weaknesses that emerge during thematic reviews or enforcement actions.

A major complication arises when institutions layer additional rules on top of vendor-supplied libraries, creating a dense mesh of triggers that overlap or contradict one another. Reviews by supervisory bodies show that banks sometimes struggle to justify the volume of scenarios running in parallel, particularly where older rules remain active despite no evidence of ongoing relevance. This complexity can be misinterpreted as intelligence, although it simply reflects incremental additions over several years. Institutions with fragmented rule inventories often face challenges when independent reviewers assess the rationale behind each scenario.

A clear difference exists between tools that adjust thresholds based on historical patterns and tools that merely allow users to modify parameters manually. Many vendors blend these concepts during demonstrations, which can confuse procurement teams that lack technical clarity on how true adaptation works. An institution seeking measurable improvements must therefore request precise explanations of how the system learns, whether it updates autonomously, and how governance controls supervise model drift, performance degradation, or biased outcomes. Without this transparency, the institution risks adopting a package that behaves identically to a legacy system under a different label.
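The distinction described above can be illustrated with a minimal sketch. It assumes a hypothetical fixed limit (`STATIC_LIMIT`) and a simple statistical baseline (mean plus `k` standard deviations of the customer's own history). Neither comes from any particular vendor product, and a real adaptive system would likely use richer features than a single amount.

```python
from statistics import mean, stdev

STATIC_LIMIT = 10_000.0  # hypothetical fixed threshold, changed only when an analyst edits it


def static_rule(amount: float) -> bool:
    """Flags any amount above the fixed limit, regardless of customer history."""
    return amount > STATIC_LIMIT


def adaptive_threshold(history: list[float], k: float = 3.0) -> float:
    """Derives a threshold from the customer's own history: mean + k standard deviations.

    k = 3.0 is an illustrative choice, not a recommended calibration.
    """
    if len(history) < 2:
        return STATIC_LIMIT  # too little history: fall back to the fixed limit
    return mean(history) + k * stdev(history)


def adaptive_rule(amount: float, history: list[float]) -> bool:
    """Flags an amount that is unusual for this specific customer."""
    return amount > adaptive_threshold(history)


# Hypothetical payment histories for two very different customers.
retail_history = [120.0, 80.0, 150.0, 95.0, 110.0]
corporate_history = [40_000.0, 55_000.0, 38_000.0, 61_000.0, 47_000.0]

# 5,000 is below the fixed limit but far outside the retail customer's pattern.
print(static_rule(5_000.0), adaptive_rule(5_000.0, retail_history))
# 50,000 breaches the fixed limit but is ordinary for the corporate customer.
print(static_rule(50_000.0), adaptive_rule(50_000.0, corporate_history))
```

In this sketch the fixed rule misses the unusual retail payment and flags the routine corporate one, while the history-based threshold does the opposite. A question worth asking a vendor is which of these two behaviours the product exhibits without manual parameter changes.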

Authorities in multiple jurisdictions emphasise the significance of model governance frameworks when evaluating systems described as intelligent. Periodic validation, explainability testing, and traceable documentation are essential when a bank relies on outputs generated by complex algorithms. Institutions that operate tools with opaque processes cannot demonstrate that they meet compliance expectations regarding accountability or technical understanding. This concern extends to scenarios where the vendor positions simple rules as advanced analytics, since the bank may develop misplaced confidence in detection strength without having the infrastructure to confirm effectiveness.

The emergence of international guidance on digital transformation in financial crime controls highlights the need for clear oversight. Banks are encouraged to recognise that advanced systems require ongoing monitoring, and that procurement choices must align with internal capabilities. These expectations exist because intelligence-based detection influences customer risk evaluation, escalation processes, and law enforcement reporting. Misinterpreting basic logic as adaptive learning may therefore lead to misplaced reliance on alerts that lack nuance and depth.

Many compliance officers report difficulty reconciling marketing claims with operational performance after implementation. Alert volumes often increase without corresponding improvements in useful outcomes, indicating that rule density remains the primary driver of activity. A genuinely intelligent system should, in theory, reduce unnecessary alerts by recognising patterns that indicate harmless behaviour, yet many institutions report no such effect. This discrepancy highlights why evaluation processes must probe beyond promotional summaries.

Institutions that seek to differentiate between real intelligence and rebranded logic must perform practical testing using anonymised historic data. By reviewing hit rates, scenario explanations, change logs, and performance metrics, the bank can identify whether the system produces contextually aware outcomes or simply applies fixed steps to every case. If the vendor cannot demonstrate how detection quality improves over time, the system most likely remains rooted in predefined triggers rather than in adaptive principles.
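One way to make the hit-rate review concrete is to replay anonymised historic data through the system period by period and compare the share of alerts that investigators confirmed. The sketch below assumes a hypothetical `Alert` record with a period label and a confirmation flag; the field names and sample data are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    period: str      # hypothetical label for the test window, e.g. "2023-Q1"
    confirmed: bool  # True if investigators confirmed the alert as suspicious


def hit_rate_by_period(alerts: list[Alert]) -> dict[str, float]:
    """Returns the share of confirmed alerts for each period, in period order."""
    totals: dict[str, int] = {}
    hits: dict[str, int] = {}
    for alert in alerts:
        totals[alert.period] = totals.get(alert.period, 0) + 1
        hits[alert.period] = hits.get(alert.period, 0) + int(alert.confirmed)
    return {period: hits[period] / totals[period] for period in sorted(totals)}


# Illustrative back-test output: four alerts per quarter, one confirmed in each.
alerts = [
    Alert("2023-Q1", True), Alert("2023-Q1", False),
    Alert("2023-Q1", False), Alert("2023-Q1", False),
    Alert("2023-Q2", False), Alert("2023-Q2", False),
    Alert("2023-Q2", False), Alert("2023-Q2", True),
]

rates = hit_rate_by_period(alerts)
print(rates)
```

A flat hit rate across periods, as in this made-up sample, does not prove the system is rule-based, but it is the kind of evidence that should prompt the vendor to explain where the claimed improvement over time appears.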

Market behaviour shaping perception

The competitive environment among technology providers encourages assertive marketing that often stretches terminology beyond its conventional meaning. Many vendors use language associated with learning to describe rule frameworks that allow conditional branching or weighted thresholds. These techniques have existed for many years and provide useful detection methods, but they do not meet the expectations associated with adaptive intelligence. As a result, the market becomes saturated with ambiguous messaging that makes comparison difficult.

Procurement teams face challenges when distinguishing between platforms because demonstrations usually display polished dashboards, colourful graphs, and dynamic interfaces. Visual presentation can create the impression of sophistication even when the underlying mechanics remain unchanged from earlier systems. Without detailed questions, institutions may assume they are acquiring tools that uncover rare or unusual activity when they are actually purchasing platforms that generate volumes based on simple parameters.

Supervisory bodies have warned that institutions must maintain responsibility for the performance of their systems regardless of vendor claims. A bank cannot rely on glossy categorisations to justify operational decisions, especially where alerts form the basis for suspicious activity reporting obligations. Authorities have repeatedly stressed that banks must understand the technical behaviour of their tools, including the data sources, logic construction, escalation pathways, and validation procedures. Ambiguity around these topics increases the risk of control weaknesses that can lead to regulatory findings.

Financial institutions also encounter tension between commercial pressures and compliance obligations. Boards may expect rapid enhancement of monitoring capacity and may be inclined to accept technological claims at face value. Compliance departments, however, must interpret supervisory expectations, ensuring that decisions reflect real detection improvements rather than cosmetic upgrades. This internal tension can lead to rushed procurement cycles where inadequate testing occurs.

The growth of cross-border regulatory cooperation has further heightened expectations for clarity. Authorities now frequently compare monitoring standards across multiple jurisdictions, requiring banks to articulate their detection logic with precision. Broad descriptions of intelligence-driven monitoring no longer satisfy examination teams searching for quantifiable evidence. For institutions operating globally, these pressures underscore the need for transparent documentation of every scenario and model in use.

When vendors oversell the capability of fixed logic frameworks, institutions may overestimate their ability to uncover sophisticated schemes that shift tactics frequently. Cases involving complex laundering structures often show that criminals adapt to predictable thresholds, splitting activity or redirecting flows to avoid detection. A system that lacks adaptive capacity cannot adjust naturally to these movements, leaving blind spots that criminals can exploit. Banks that believe their monitoring is more capable than it truly is may overlook key vulnerabilities.

Procurement teams also need to examine how vendors describe calibration. A platform that introduces thousands of predefined rules may appear comprehensive, yet this volume often causes instability and inflated alert numbers. Without intelligent prioritisation, teams must manually triage alerts without context, reducing the potential operational benefits. Understanding whether calibration reflects genuine pattern recognition or a simple adjustment of threshold sensitivity remains essential.

Distinguishing adaptive capability from rule expansion

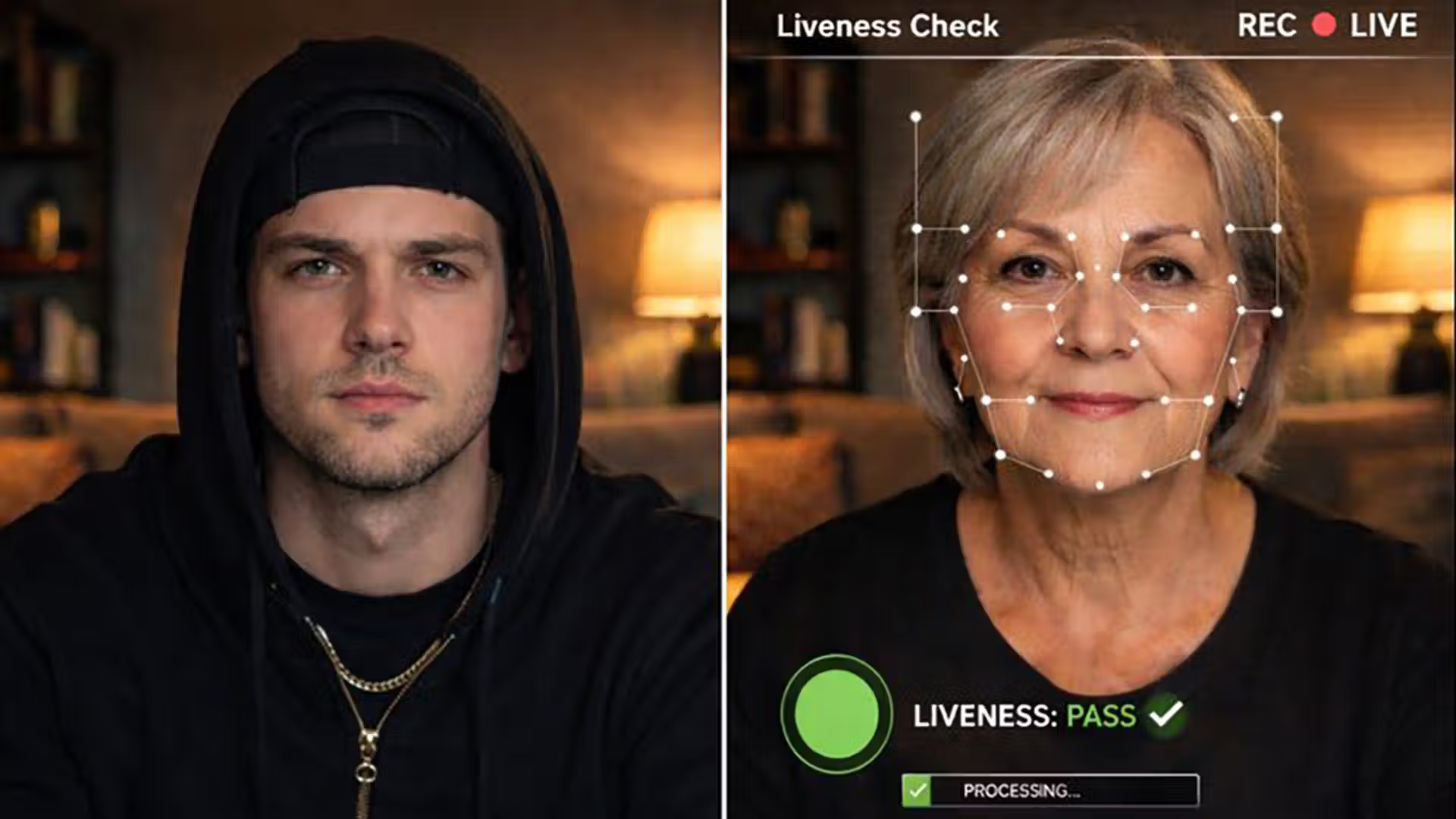

Evaluating a monitoring system requires a detailed analysis of how the tool handles new behaviour that deviates from historic patterns. Institutions must observe whether the system adjusts scores or thresholds automatically or whether teams must manually rewrite logic. A system that truly learns should attribute weight to customer context, transactional behaviour, historical interactions, and evolving patterns. If the tool responds identically regardless of context, it demonstrates rule-based thinking even if marketed as intelligence.

Historically, many enforcement actions have revealed that banks relied heavily on static rules without periodic review. Authorities often note that outdated thresholds remained active for years, failing to capture new techniques used by criminal groups. As financial flows evolve, institutions require systems capable of detecting anomalies that do not fit existing categories. Learning-based models support the detection of unusual combinations of activities, while rigid rules identify only predefined patterns. This distinction is central to understanding whether a technology provider delivers meaningful improvements.

Testing should include scenarios where input data presents multi-dimensional behaviour. A genuine adaptive tool should incorporate various signals, adjusting its view when conflicting or reinforcing information appears. Conversely, a rule-based platform might simply activate multiple rules in parallel, generating a flood of alerts without context. Compliance teams must request evidence of the system’s ability to distinguish risk relevance rather than relying solely on volume.
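The contrast between parallel rule activation and a combined view of signals can be sketched as follows. The signal names, cut-offs, weights, and bias are all hypothetical and hand-set for illustration; in a learning-based system the weights would be fitted from data rather than typed in.

```python
import math

# Hypothetical cut-offs: each risk signal above its cut-off raises its own alert.
RISK_CUTOFFS = {"amount_spike": 0.5, "new_counterparty": 0.5}

# Hypothetical weights for a combined score; the negative weight is a mitigating signal.
WEIGHTS = {"amount_spike": 1.5, "new_counterparty": 1.0, "matches_payroll_cycle": -2.5}
BIAS = -1.0


def rule_alerts(signals: dict[str, float]) -> list[str]:
    """Rule-style behaviour: every triggered rule produces a separate alert."""
    return [name for name, cutoff in RISK_CUTOFFS.items() if signals.get(name, 0.0) > cutoff]


def combined_score(signals: dict[str, float]) -> float:
    """Combines reinforcing and conflicting signals into one score between 0 and 1."""
    z = BIAS + sum(weight * signals.get(name, 0.0) for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


# The same risk signals, with and without mitigating context.
without_context = {"amount_spike": 1.0, "new_counterparty": 1.0}
with_context = {**without_context, "matches_payroll_cycle": 1.0}

print(rule_alerts(without_context), round(combined_score(without_context), 2))
print(rule_alerts(with_context), round(combined_score(with_context), 2))
```

In this sketch the rule set raises the same two alerts in both cases, whereas the combined score drops once the mitigating signal is present. That difference in behaviour, rather than the volume of alerts, is what the testing described above is meant to expose.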

Institutions should also examine how the tool treats feedback loops. Learning-based systems refine their output through the review of previous alerts, closing the cycle between model output and investigator judgment. Rule-based tools cannot incorporate such feedback without manual redesign. Many vendors describe manual tuning as evidence of learning, yet this represents human intervention rather than automated improvement. For procurement decisions, understanding the difference between system-led and analyst-led adaptation is essential.
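A system-led feedback loop can be illustrated with a deliberately small example: a logistic score whose weights are nudged each time an investigator closes an alert. This is a generic online gradient step chosen for illustration, not a description of how any specific product learns; the feature layout and learning rate are assumptions.

```python
import math


def score(weights: list[float], features: list[float]) -> float:
    """Logistic score between 0 and 1 for one alert's feature vector."""
    z = sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def learn_from_disposition(
    weights: list[float],
    features: list[float],
    confirmed_suspicious: bool,
    learning_rate: float = 0.5,  # illustrative value
) -> list[float]:
    """Returns weights adjusted by one gradient step towards the investigator's decision."""
    label = 1.0 if confirmed_suspicious else 0.0
    error = label - score(weights, features)
    return [w + learning_rate * error * x for w, x in zip(weights, features)]


# Hypothetical feature layout: [bias, amount_spike, new_counterparty].
features = [1.0, 1.0, 0.0]
weights = [0.0, 0.0, 0.0]

score_before = score(weights, features)
# Investigators close the same kind of alert as a false positive ten times.
for _ in range(10):
    weights = learn_from_disposition(weights, features, confirmed_suspicious=False)
score_after = score(weights, features)

print(score_before, score_after)
```

Here the score for this pattern falls without anyone editing a rule, which is the behaviour to look for in a demonstration. If the equivalent change in a vendor's tool requires an analyst to alter a parameter, the adaptation is analyst-led.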

Governance structures must support whichever system is chosen. For rule-based models, institutions need inventories, version histories, and justification for every scenario. For advanced systems, governance teams must perform validation, bias testing, and change monitoring. When banks fail to differentiate these requirements, regulators often identify gaps in oversight. It is therefore critical that compliance teams align procurement choices with their governance capability to avoid operational weaknesses.
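For the rule-based side, the inventory requirement lends itself to a simple automated check. The sketch below assumes a hypothetical `Scenario` record and a twelve-month review cycle; both are illustrative, and an institution's own policy would define the actual fields and intervals.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Scenario:
    rule_id: str
    version: int
    justification: str   # documented rationale for keeping the scenario active
    last_reviewed: date


def governance_gaps(
    inventory: list[Scenario], today: date, max_age_days: int = 365
) -> dict[str, list[str]]:
    """Lists, per rule, any missing justification or overdue review."""
    gaps: dict[str, list[str]] = {}
    for scenario in inventory:
        issues = []
        if not scenario.justification.strip():
            issues.append("missing justification")
        if (today - scenario.last_reviewed).days > max_age_days:
            issues.append("review overdue")
        if issues:
            gaps[scenario.rule_id] = issues
    return gaps


# Hypothetical inventory entries.
inventory = [
    Scenario("R-001", 3, "Cash structuring typology", date(2024, 3, 1)),
    Scenario("R-002", 1, "", date(2021, 6, 15)),
]

gaps = governance_gaps(inventory, today=date(2024, 6, 1))
print(gaps)
```

A report like this gives independent reviewers a starting point for challenging scenarios that remain active without a current rationale.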

The increasing regulatory attention on explainability adds further complexity. Even adaptive systems must provide clear, auditable explanations of why a particular alert was generated. If the tool cannot articulate the reasoning in a format that investigators understand, the institution may struggle to meet supervisory expectations for transparency. Banks must balance the ambition of adopting advanced tools with the practical reality of documenting their behaviour to regulators.
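As a rough illustration of what an auditable explanation might contain, the sketch below ranks each signal's contribution to a linear score. It assumes a simple weighted-sum model with hypothetical signal names and weights; more complex models need dedicated attribution techniques, which are outside the scope of this example.

```python
def explain(weights: dict[str, float], signals: dict[str, float]) -> list[tuple[str, float]]:
    """Ranks signals by the size of their contribution (weight x value) to the score."""
    contributions = {name: weight * signals.get(name, 0.0) for name, weight in weights.items()}
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical weights and the signals observed for one alert.
weights = {"amount_spike": 1.5, "new_counterparty": 1.0, "dormant_account": 2.0}
signals = {"amount_spike": 1.0, "new_counterparty": 1.0}

explanation = explain(weights, signals)
for name, contribution in explanation:
    print(f"{name}: {contribution:+.2f}")
```

An investigator reading this output can see which factors drove the alert and which played no part, which is the minimum a supervisor is likely to expect from any system described as intelligent.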

To ensure clarity, institutions should request detailed architecture diagrams, algorithm documentation, dataset inventories, and system behaviour logs from vendors. These materials allow internal teams to understand whether the system generates meaningful insight or simply repackages static logic behind an attractive interface. Many institutions discover that significant portions of vendor offerings rely on conventional methods despite promotional claims that suggest otherwise.

Procurement teams should also ask for independent evaluation results, peer review documents, and references from institutions of similar size or profile. External validation helps verify whether the system performs consistently across environments. Additionally, testing should include multi-year historical data to observe whether the tool identifies hidden patterns rather than relying exclusively on predefined triggers. A system that uncovers previously unreported anomalies may demonstrate characteristics of adaptation, while a system that flags only known patterns is behaving like a rule-based tool and requires careful scrutiny.

How institutions can respond responsibly

A sustainable approach to automated monitoring requires transparency, robust governance, and clear alignment between capabilities and business needs. Institutions should build procurement frameworks that evaluate technology claims based on measurable outputs rather than marketing language. This includes requiring detailed demonstrations where vendors explain how the system processes inputs, how scoring works, how updates occur, and how oversight teams validate performance. Banks must understand each component to ensure that detection aligns with regulatory expectations.

Compliance departments should coordinate early with technology teams, risk owners, data specialists, and legal staff. This ensures that system behaviour matches the institution’s risk appetite, operational structure, and jurisdictional obligations. Governance committees must retain oversight of changes to rule sets, data sources, scoring methods, and model architecture. By maintaining controlled processes, the institution can ensure that technology does not drift from its intended purpose or introduce unintended bias.

Institutions should document every step of their evaluation process. Auditable records demonstrate to supervisors that procurement choices were based on evidence and that the institution understood the technological foundations of its systems. This documentation becomes essential during inspections, thematic reviews, or investigations into system failures. When institutions can articulate the justification behind their detection methods, they reduce the likelihood of findings related to inadequate oversight.

Ongoing testing is also critical. Banks should conduct scenario testing, data quality reviews, and performance monitoring to ensure that systems continue to operate effectively as customer behaviour and financial flows evolve. This testing should include both rule-based and adaptive models, since both require verification to ensure effectiveness. Regular evaluation ensures that institutions can identify weaknesses before they become compliance failures.

Institutions must also be realistic about the value of automation. Even advanced models cannot replace the professional judgement of investigators, particularly in cases involving complex cross-border activity, nested transactions, or unusual patterns of behaviour. Technology should support investigators by highlighting areas of potential risk, but it cannot independently determine intent. Recognising these limits helps institutions maintain balanced expectations.

Supervisory engagement plays a key role in shaping future adoption. Banks that maintain transparent dialogue with authorities about new technologies avoid misunderstandings and demonstrate proactive compliance. This collaboration can also help institutions interpret regulatory expectations for new tools. By sharing information, banks support broader industry understanding of effective technological controls.

Elevating staff understanding of these tools is equally important. Training programmes should explain how detection works, how alerts are generated, and how to interpret system outputs. Investigators who understand system logic are better equipped to challenge unexpected behaviour, identify gaps, or request improvements. Training builds a workforce capable of managing both rule-based and adaptive systems in a complex regulatory environment.

Institutions that adopt a measured, informed approach to monitoring technology ultimately build stronger resilience. By emphasising transparency, documentation, testing, and collaboration, they avoid reliance on misleading claims and instead focus on demonstrable capability. This balanced perspective ensures that the organisation aligns its detection environment with real risk and regulatory expectations.

Key Points

• Many vendors market rigid logic as adaptive systems

• Regulators expect banks to understand the mechanics behind their monitoring tools

• Institutions must evaluate detection capability using measurable performance tests

• Governance frameworks remain essential regardless of system type

• Adaptive capacity requires explainability, validation, and monitoring

