Napier AI has published its 2025–2026 benchmark report on anti-financial-crime performance, delivering one of the most comprehensive analyses of where global compliance frameworks succeed and where they quietly fail. The study consolidates data from dozens of jurisdictions to compare how much countries spend on anti-money-laundering enforcement versus how effectively those investments translate into detection and prevention. The findings make clear that the industry’s current trajectory is unsustainable.

The report confirms that compliance costs continue to rise faster than risk mitigation benefits. It exposes an imbalance between process and outcome, showing that most financial institutions are burdened by inefficient alerting systems, weak data governance, and duplicated investigative effort. Yet it also identifies a path forward. Through explainable intelligence, transparent metrics, and continuous data feedback, institutions can not only reduce false positives but also demonstrate measurable value to supervisors and shareholders alike.

This article decodes the core themes of Napier AI’s findings, tracing the operational implications for banks, payment processors, insurers, and asset managers. It explores how leaders can convert analytics into accountability, establish metrics that reflect real effectiveness, and align their programs with evolving global legal standards.

Global AML performance index as a mirror of efficiency

Napier AI’s analysis compares national financial systems by correlating estimated illicit-flow volumes with each country’s economic output and compliance expenditure. The results reveal a striking misalignment between spending and impact. Wealthier jurisdictions may spend billions annually, yet some smaller, data-driven economies outperform them in terms of laundering deterrence per dollar spent.

The index functions less as a ranking and more as a diagnostic instrument. It highlights structural inefficiencies that persist across institutions regardless of size or sector. Many programs still measure success by inputs—number of reports filed, alerts cleared, or staff trained—rather than by outcomes such as confirmed cases, prevented losses, or collaborative results with authorities.

When analyzed through that lens, even technologically mature markets appear overextended. Systems that rely on rigid rule sets create high alert volumes but low investigative value. Meanwhile, countries emphasizing data accessibility, real-time feedback between regulators and industry, and proportional supervision achieve significantly better detection ratios.

For boards, the message is clear: effective AML is not about how much is spent but how precisely each dollar targets measurable risk reduction. Programs that tie expenditure to observable impact—conversion rates, case quality, timeliness—deliver compounding returns. They also strengthen credibility with regulators who increasingly expect demonstrable proof of effectiveness rather than mere compliance activity.
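As a rough illustration of the outcome measures mentioned above, the sketch below computes an alert-to-case conversion rate, a case-to-report rate, and a median closure time from a handful of alert records. The `Alert` fields, the sample values, and the metric names are assumptions made for this example; they are not taken from the Napier AI report.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Alert:
    """Hypothetical alert record; field names are illustrative only."""
    alert_id: str
    escalated_to_case: bool  # an investigator opened a case from this alert
    reported: bool           # the case led to a filed suspicious activity report
    days_to_close: float     # time from alert creation to final disposition


def outcome_metrics(alerts):
    """Summarize outcomes rather than raw activity volumes."""
    total = len(alerts)
    if total == 0:
        raise ValueError("no alerts supplied")
    cases = sum(a.escalated_to_case for a in alerts)
    reports = sum(a.reported for a in alerts)
    return {
        "alert_to_case_rate": cases / total,
        "case_to_report_rate": reports / cases if cases else 0.0,
        "median_days_to_close": median(a.days_to_close for a in alerts),
    }


# Made-up sample data for the example.
alerts = [
    Alert("A1", True, True, 4.0),
    Alert("A2", False, False, 1.0),
    Alert("A3", True, False, 6.0),
    Alert("A4", False, False, 2.0),
]
metrics = outcome_metrics(alerts)
print(metrics)
```

Tracked over time and set against program cost, figures like these give a board a view of impact per dollar that input counts such as alerts cleared cannot provide.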

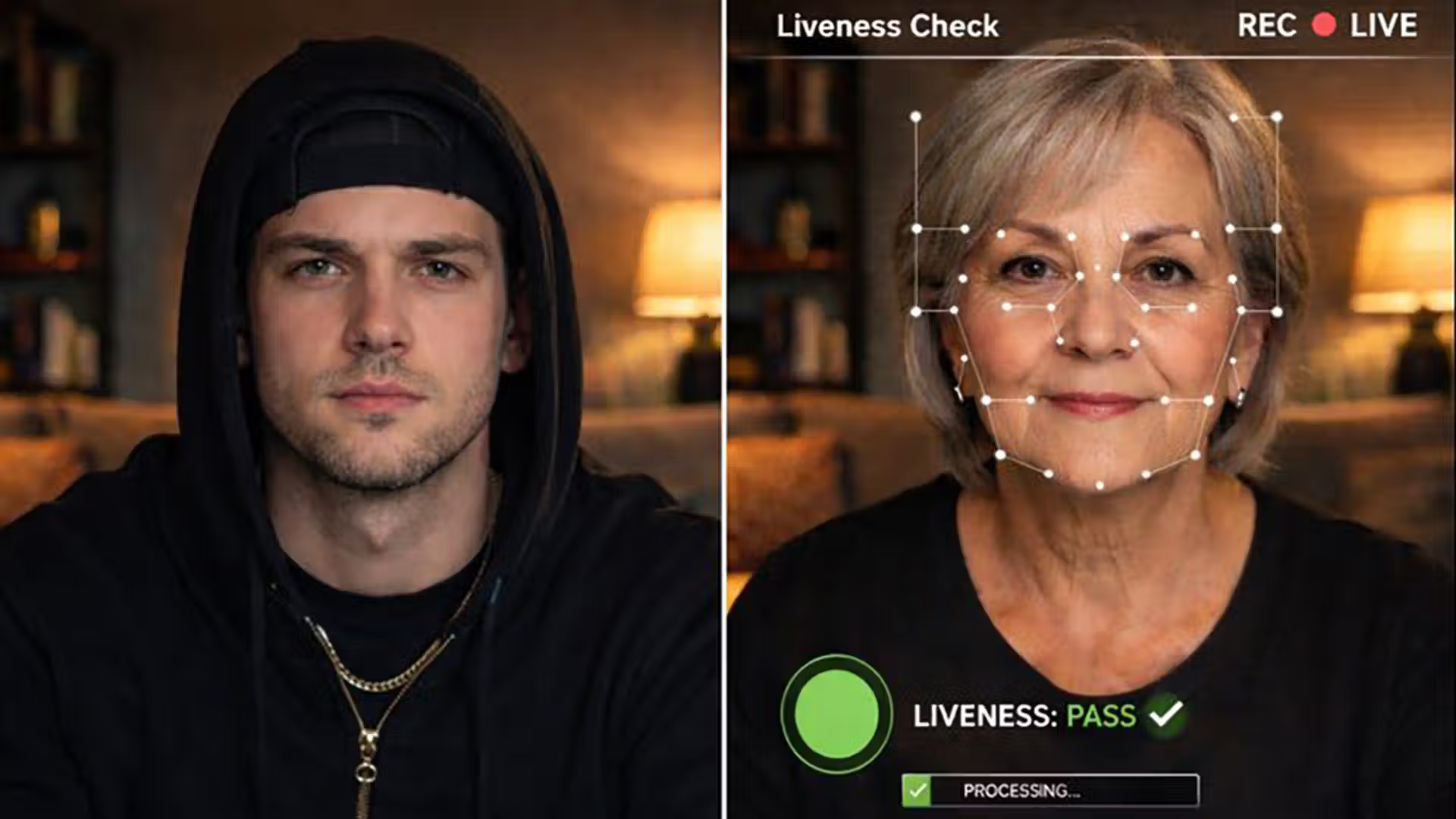

The transformation from rule-based to contextual detection

One of the report’s most significant findings concerns the stagnation of traditional monitoring architectures. Static thresholds and generic rules generate overwhelming noise. They treat every unusual transaction as equally suspicious, producing a flood of alerts that waste time and obscure genuine risk.

Napier AI’s research demonstrates that institutions moving to contextual detection models—where customer behavior, peer comparison, and historical data shape alert logic—achieve drastically improved accuracy. Context allows monitoring to evolve from binary rule firing into nuanced probability assessment.
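To make the contrast concrete, here is a minimal sketch that compares a flat amount threshold with a score based on how far a transaction sits from its peer group's usual behavior. The peer groups, amounts, threshold, and z-score cutoff are all hypothetical, and a simple z-score stands in for whatever contextual logic a production system would use.

```python
from statistics import mean, pstdev

STATIC_THRESHOLD = 10_000  # hypothetical flat rule applied to every customer

# Hypothetical history of typical transaction amounts per peer group.
peer_history = {
    "retail": [120, 80, 200, 150, 95, 300, 110],
    "corporate_treasury": [9_000, 12_000, 15_000, 11_000, 13_500, 10_500],
}


def static_rule(amount):
    """Binary rule: fire whenever the amount exceeds one global threshold."""
    return amount > STATIC_THRESHOLD


def peer_z_score(amount, peer_group):
    """Distance from the peer group's mean, in standard deviations."""
    history = peer_history[peer_group]
    sigma = pstdev(history)
    if sigma == 0:
        return 0.0
    return (amount - mean(history)) / sigma


def contextual_rule(amount, peer_group, z_cutoff=3.0):
    """Fire only when the amount is unusual for this peer group."""
    return peer_z_score(amount, peer_group) > z_cutoff


# A routine treasury payment trips the static rule but not the contextual one.
print(static_rule(12_500), contextual_rule(12_500, "corporate_treasury"))
# An outsized retail payment slips under the static rule but stands out in context.
print(static_rule(4_000), contextual_rule(4_000, "retail"))
```

The same amount can be noise for one customer segment and a strong signal for another, which is the point of letting context shape the alert logic.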

Such transformation demands three foundations:

- Reliable data pipelines ensuring that every analytical input is current, reconciled, and version-controlled.

- Transparent models capable of explaining which factors drive risk scores and why they change.

- Integrated case feedback, feeding investigator outcomes directly back into the detection layer.

The transition is not just technical but cultural. Investigators accustomed to rote alert clearing must learn to interpret patterns and articulate analytical reasoning. Supervisors must shift evaluation metrics from volume cleared to insight generated. Training programs should focus on pattern literacy rather than rule memorization.

Institutions that succeed in this transition typically start small—selecting one high-volume scenario, cleaning the data, testing an interpretable model, and measuring improvement. Once confidence builds, they replicate the framework across products and jurisdictions. This incremental adoption minimizes disruption while proving that explainable automation can coexist with regulatory transparency.

Governance as the missing pillar of AML modernization

Napier AI’s study highlights governance weakness as the most common root cause of underperformance. Technology alone does not explain inefficiency; the absence of ownership, accountability, and coordinated oversight does. Many institutions operate in silos where risk, operations, and technology functions work at cross-purposes.

A mature AML governance framework requires explicit data stewardship, structured model management, and transparent reporting. Each critical data attribute—from customer identifiers to sanctions lists—needs an assigned owner responsible for accuracy and refresh frequency. Model governance must follow a disciplined lifecycle of design, validation, deployment, and periodic review, with all changes logged and retraceable.

Reporting lines also demand clarity. Senior management must receive outcome-based dashboards that aggregate conversion metrics, cycle times, and regulatory feedback. Internal audit should test these measures against objective performance data rather than policy checklists.

The report links weak governance with regulatory fatigue. Jurisdictions where oversight bodies lack coherent metrics tend to rely on prescriptive rules, forcing firms to demonstrate procedural compliance instead of genuine effectiveness. By contrast, when both supervisors and institutions share performance data, oversight becomes collaborative, accelerating mutual learning.

Ultimately, governance converts technology from a cost center into a strategic asset. When accountability is embedded, detection systems evolve continuously, models remain transparent, and risk appetite aligns with measurable control.

Aligning with international AML regulatory evolution

The report underscores how legislative frameworks are converging on a common principle: risk-based proportionality supported by demonstrable outcomes. Global trends point toward regulators rewarding institutions that prove control effectiveness through data rather than volume.

Across major financial centers, reforms are underway. In the United States, implementation of beneficial ownership reporting under recent corporate transparency measures complements the long-standing obligations of the Bank Secrecy Act and its modernization amendments. Institutions are expected to integrate ownership data directly into customer and network risk models.

Within the European Union, the AML package adopted in 2024 combines a regulation that will apply directly across member states with a directive that each state must transpose into national law. The package harmonizes due diligence standards, establishes a central supervisory authority (the Anti-Money Laundering Authority, AMLA), and extends oversight to digital asset providers. The European framework emphasizes direct accountability and data traceability, reducing fragmentation across national systems.

In the United Kingdom, the Money Laundering Regulations continue to serve as the backbone of domestic enforcement, while enhancements target improved risk assessment, reporting consistency, and technology-enabled supervision. Similar momentum exists in Canada, Australia, and Singapore, where regulators prioritize measurable efficiency and effective information-sharing over procedural conformity.

The report concludes that the next decade of AML compliance will depend on how institutions operationalize these legislative principles. Those that can document explainable decisions, maintain evidence of continuous improvement, and quantify effectiveness will define the new standard for credibility.

Turning analysis into sustained operational gains

Napier AI’s benchmark ends on a pragmatic note: the data shows improvement is attainable when programs evolve systematically rather than reactively. Institutions achieving the best performance follow a repeatable pattern of modernization.

Step one is establishing a foundation of reliable data. Every transformation fails if source information is inconsistent. Firms should begin by profiling key data sets, defining validation thresholds, and publishing weekly quality metrics.
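A data-quality check of this kind can start very simply. The sketch below profiles field completeness for a small set of customer records and compares each field against a validation threshold. The records, field names, and threshold values are invented for illustration, and completeness is only one of several quality dimensions a firm might track.

```python
# Hypothetical customer records; None marks a missing value.
records = [
    {"customer_id": "C1", "country": "GB", "date_of_birth": "1980-02-01"},
    {"customer_id": "C2", "country": None, "date_of_birth": "1975-07-19"},
    {"customer_id": "C3", "country": "FR", "date_of_birth": None},
    {"customer_id": "C4", "country": "DE", "date_of_birth": "1990-11-30"},
]

# Assumed minimum completeness per field (share of non-empty values).
thresholds = {"customer_id": 1.0, "country": 0.95, "date_of_birth": 0.70}


def completeness(records, field):
    """Share of records in which the field is present and non-empty."""
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def quality_report(records, thresholds):
    """Compare each field's completeness with its validation threshold."""
    report = {}
    for field, minimum in thresholds.items():
        score = completeness(records, field)
        report[field] = {"completeness": score, "passed": score >= minimum}
    return report


report = quality_report(records, thresholds)
for field, result in report.items():
    print(field, result)
```

Running a report like this on a fixed schedule and publishing the results is one way to produce the weekly quality metrics described above.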

Step two is measurable experimentation. Deploy one interpretable model on a controlled dataset, track its performance against current rules, and measure precision, recall, and workload impact. Early success builds internal trust.
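The comparison in step two can be sketched as follows, assuming a labeled test set in which investigators have already confirmed which transactions were genuinely suspicious. The transaction IDs and flag sets are made up, and alert count is used here as a simple proxy for workload.

```python
def evaluate(flagged, confirmed):
    """Precision, recall, and alert count for one detection approach."""
    flagged, confirmed = set(flagged), set(confirmed)
    true_positives = len(flagged & confirmed)
    precision = true_positives / len(flagged) if flagged else 0.0
    recall = true_positives / len(confirmed) if confirmed else 0.0
    return {"precision": precision, "recall": recall, "alerts": len(flagged)}


# Hypothetical controlled dataset: transactions confirmed as suspicious.
confirmed = {"T3", "T7"}

# What the current rules flag versus what the candidate model flags.
rules_flagged = {"T1", "T2", "T3", "T4", "T5", "T6", "T7", "T8"}
model_flagged = {"T3", "T7", "T9"}

baseline = evaluate(rules_flagged, confirmed)
candidate = evaluate(model_flagged, confirmed)

# Share of alerts investigators no longer need to review.
workload_reduction = 1 - candidate["alerts"] / baseline["alerts"]

print("rules:", baseline)
print("model:", candidate)
print("workload reduction:", workload_reduction)
```

In this toy example both approaches catch every confirmed case, so the difference shows up entirely in precision and alert volume. In a real pilot, any drop in recall would need to be weighed against the workload saved.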

Step three is process redesign. Align investigative workflow with analytical outputs, so each score or alert feeds seamlessly into narrative generation. Use structured templates that capture essential details without redundancy.

Step four is institutional learning. Outcomes from investigations must return to model development and risk assessment. This loop turns the program into a self-correcting system capable of adapting to emerging typologies.

Finally, leadership must communicate results transparently. When investigators, compliance officers, and executives see measurable evidence that smarter detection reduces effort while increasing accuracy, cultural resistance fades.

By following these principles, institutions transform compliance into a performance discipline. They shift from reactive remediation toward proactive prevention. Napier AI’s data suggests that such maturity not only improves regulatory standing but also protects profitability by eliminating wasted effort.

Related Links

- Financial Action Task Force – The FATF Recommendations

- U.S. Treasury – Beneficial Ownership Information Reporting Rule

- European Union – Regulation (EU) 2024/1620 on AML Supervision

- United Kingdom – Money Laundering Regulations 2017

- AUSTRAC – How to Comply and Report

Source: Napier AI
